Data Vault 2.0 as a new methodology for designing and implementing data warehouses

In our new series of articles, we would like to answer these questions and present what DV is and how to use it to design a data warehouse in accordance with best practices.

What is Data Vault 2.0?

Data Vault 2.0 is more than just a modern way of modeling data. This is a methodology that will tell us how to implement a data warehouse that meets the stringent requirements of modern business. Moreover, it takes into account good practices in creating and maintaining data warehouses in the areas of: data modeling, architecture, project methodology itself and implementation.

It is worth noting at the outset that DV 2.0 is an approach that is characterised by a strong emphasis on auditing and tracking data loaded from many sources. It is a flexible structure that allows you to easily expand your data warehouse with new data or entire areas, as well as implement necessary business changes.

In this article, I will explain the most important components of the DV 2.0 architecture and model, which we will discuss in more detail in the next text. Then we will look at the project management methodology, taking into account the pros and cons of using DV 2.0 in commercial projects.

Architecture

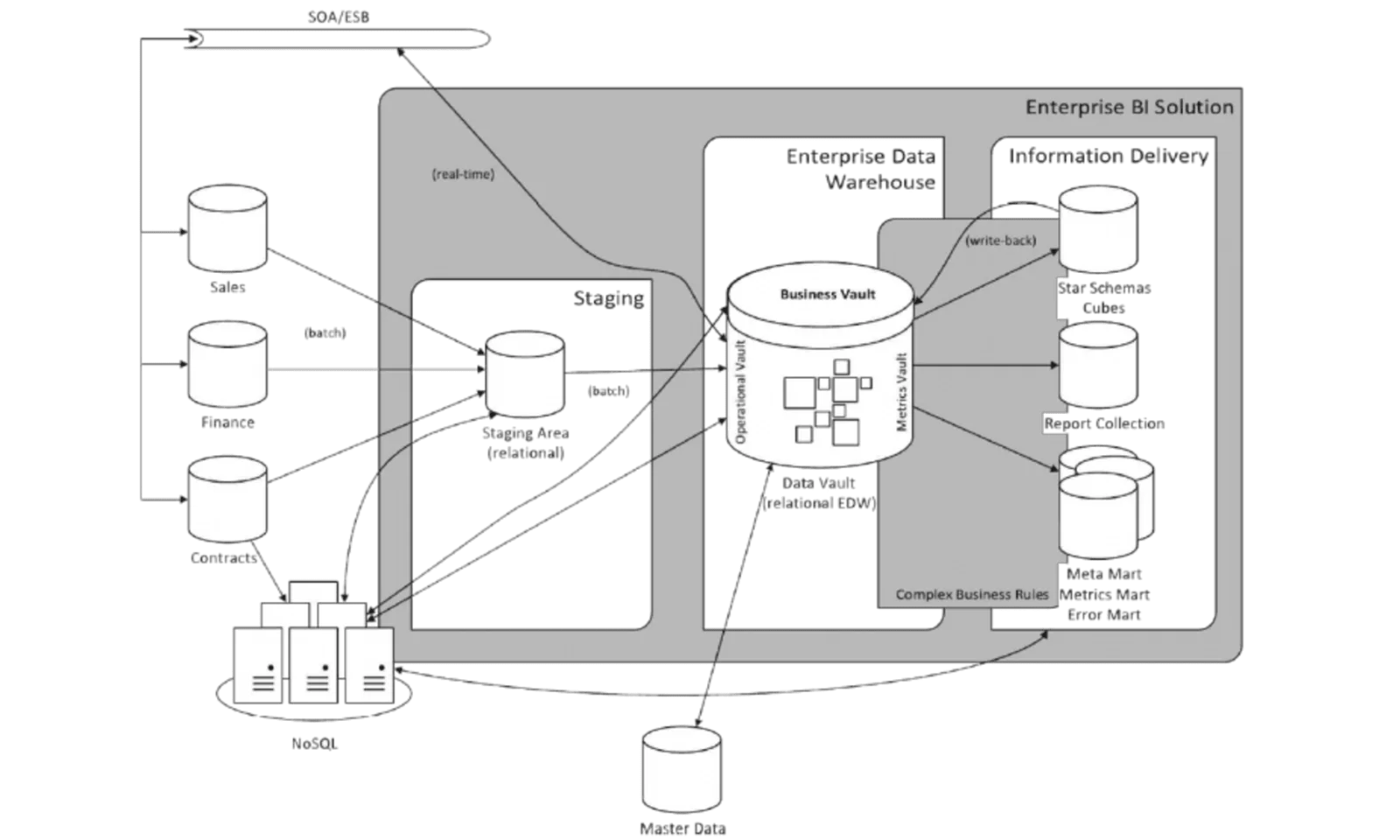

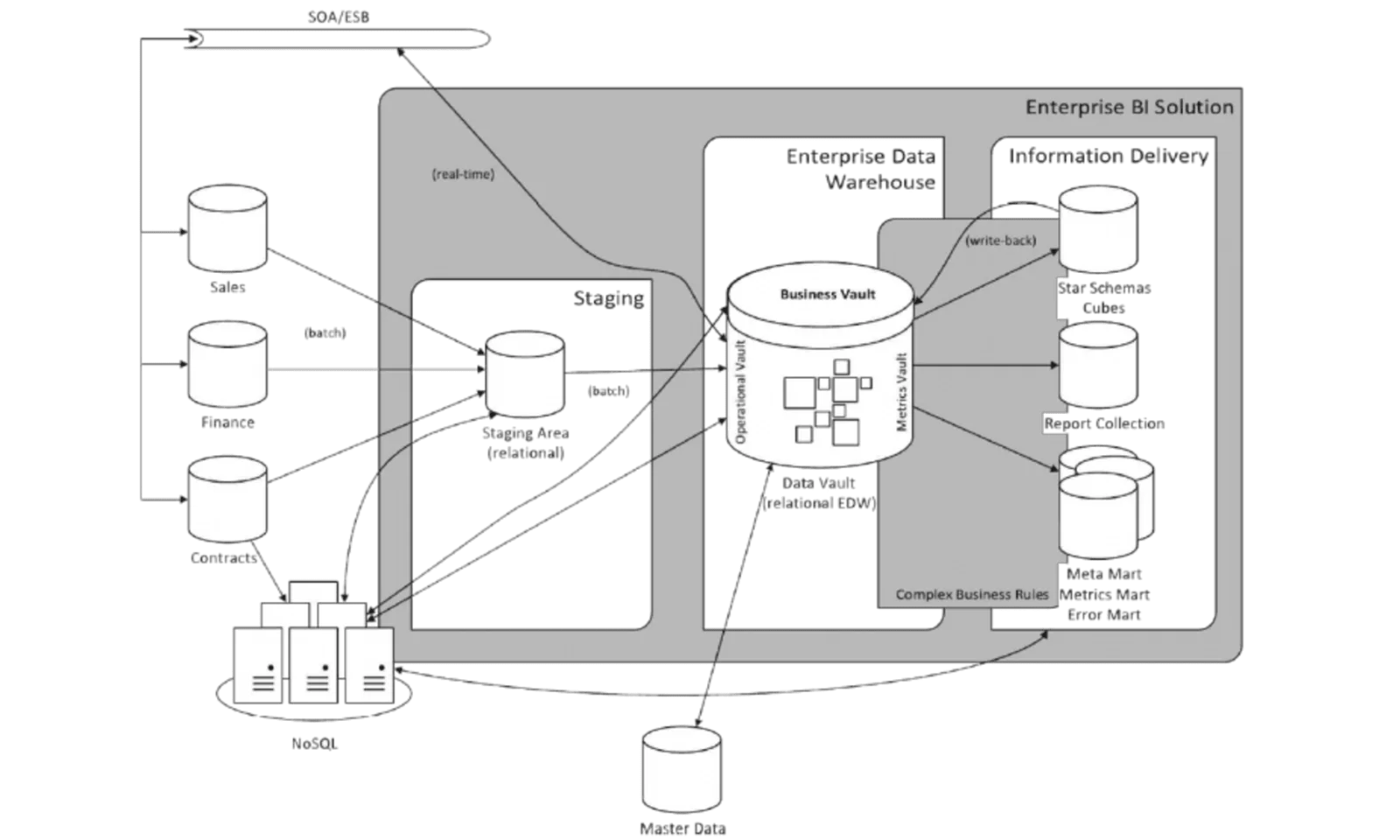

The three-layer architecture of the full solution is presented in Figure 1 and consists of three main layers:

- Staging , where raw data from other systems is loaded,

- Data warehouse layer ( Enterprise Data Warehouse) , with various Vault areas,

- Information layer ( Information Delivery) – containing Information Marts , modeled in a way understandable to the end user, e.g. using multidimensional models.

The three layers mentioned above consist of the following areas:

- Staging – as in other approaches, the main goal of this area is to unload data from source systems as quickly as possible, so as to cause the least possible load on them. The process should avoid any transformations at this stage. The data structure should reflect the structure of the original data as closely as possible, including audit metadata such as timestamps, data source, etc.

- Data Warehouse Layer (EDW)

- Raw Data Vault – a data warehouse layer that, as the name suggests, is intended to store raw data that is loaded from the Staging area . These data are most often subject to minor transformations (so-called hard business rules ), such as normalization, supplementing default values or standardizing data types. The structure of this layer is created in accordance with the modeling principles in DV 2.0 (described below).

- Metrics Vault – an optional area that extends the data warehouse layer with the ability to store information about the process and individual runs, including process metadata and all kinds of technical metrics, such as the number of rows loaded, the number of rejected rows, loading time, etc.

- Operational Vault – an optional area that is an extension of the data warehouse layer with a place that provides data (for reading and writing) needed for operating systems ( Operational Systems ), such as Master Data Management (MDM) . Thanks to this, systems of this type can have direct access to the data warehouse layer and do not have to use the Staging or Information Marts area . Additionally, Operational Vault can be accessed by systems that use raw data for various types of analyses, e.g. those using data mining algorithms and systems that need data in real time.

- Business Vault – another optional area that is not a separate layer, but an extension of the data warehouse layer, where data downloaded from Raw Data Vault is transformed by applying business rules (so-called soft business rules ). These rules, unlike their hard counterparts, transform data according to business requirements. Business Vault is an intermediate element between Raw Data Vault and Information Marts , which makes it easier to later transform data into structures that will be used by end users. By design, users do not have access to the Raw Data Vault , but the so-called power users who can use SQL and relational models can have access to Business Vault .

- Information Marts ( ID ) – a layer created for end users, which stores data transformed into a form understandable by users and applications. The name Information Marts was used by the creators of DV 2.0 not by accident - it is to emphasize that, unlike Data Marts , in this area we already operate on information, i.e. data that is dedicated to a given business area, cleaned and transformed using business rules ( soft business rules ) and potentially aggregated. In other words, prepared for reporting. Information Marts are often built as a Star Schema , which is a model that works well with reporting software. This layer also takes into account areas such as:

- Error Mart – information about errors, rejected records, broken business rules

- Meta Mart – all kinds of metadata.

Unlike other Information Marts , Error Mart and Meta Mart cannot be recreated from raw data ( Raw Data Vault ).

Structure modeling in accordance with DV 2.0

As mentioned above, DV 2.0 is more than just a model, but its main advantage is the structure in which data is stored - it is the core of the entire concept.

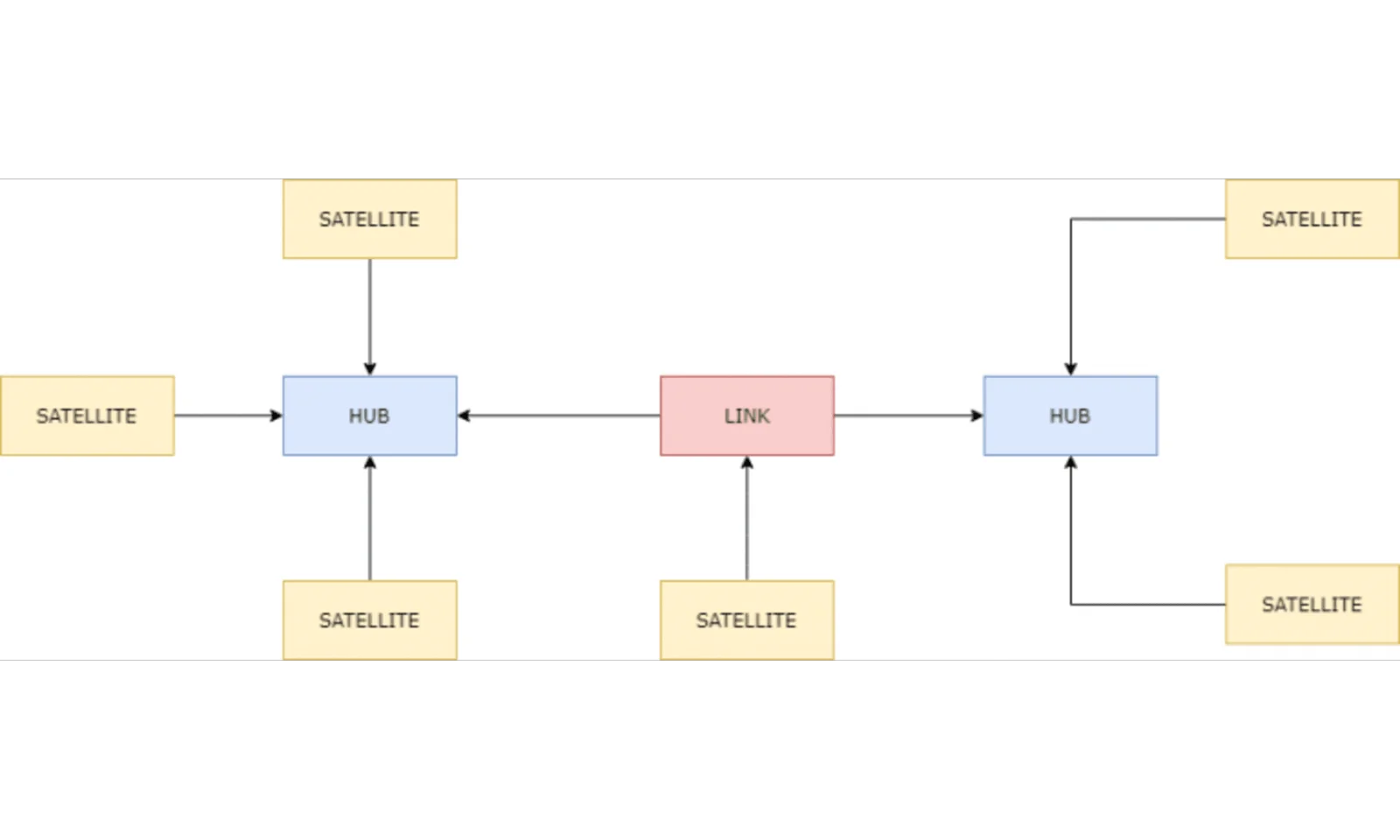

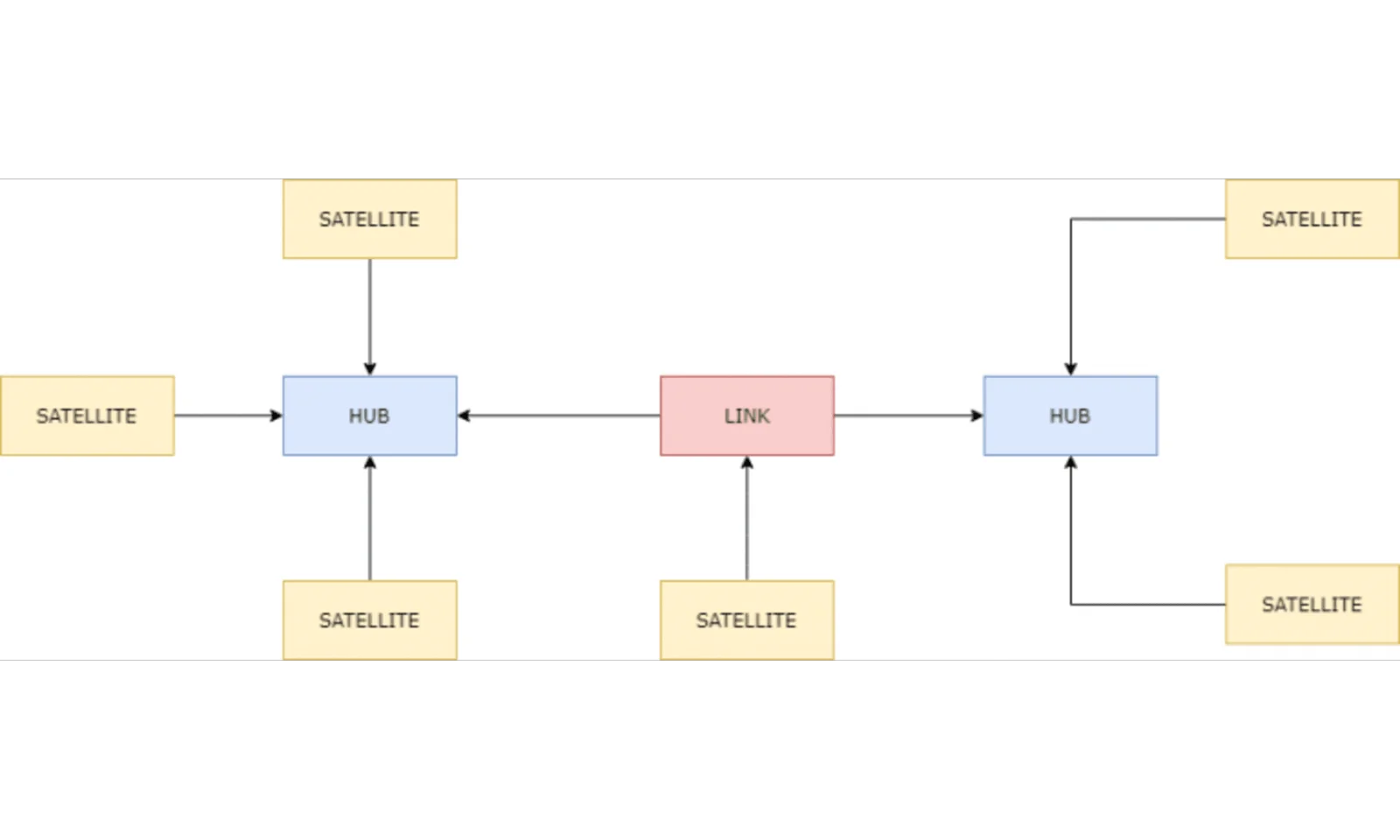

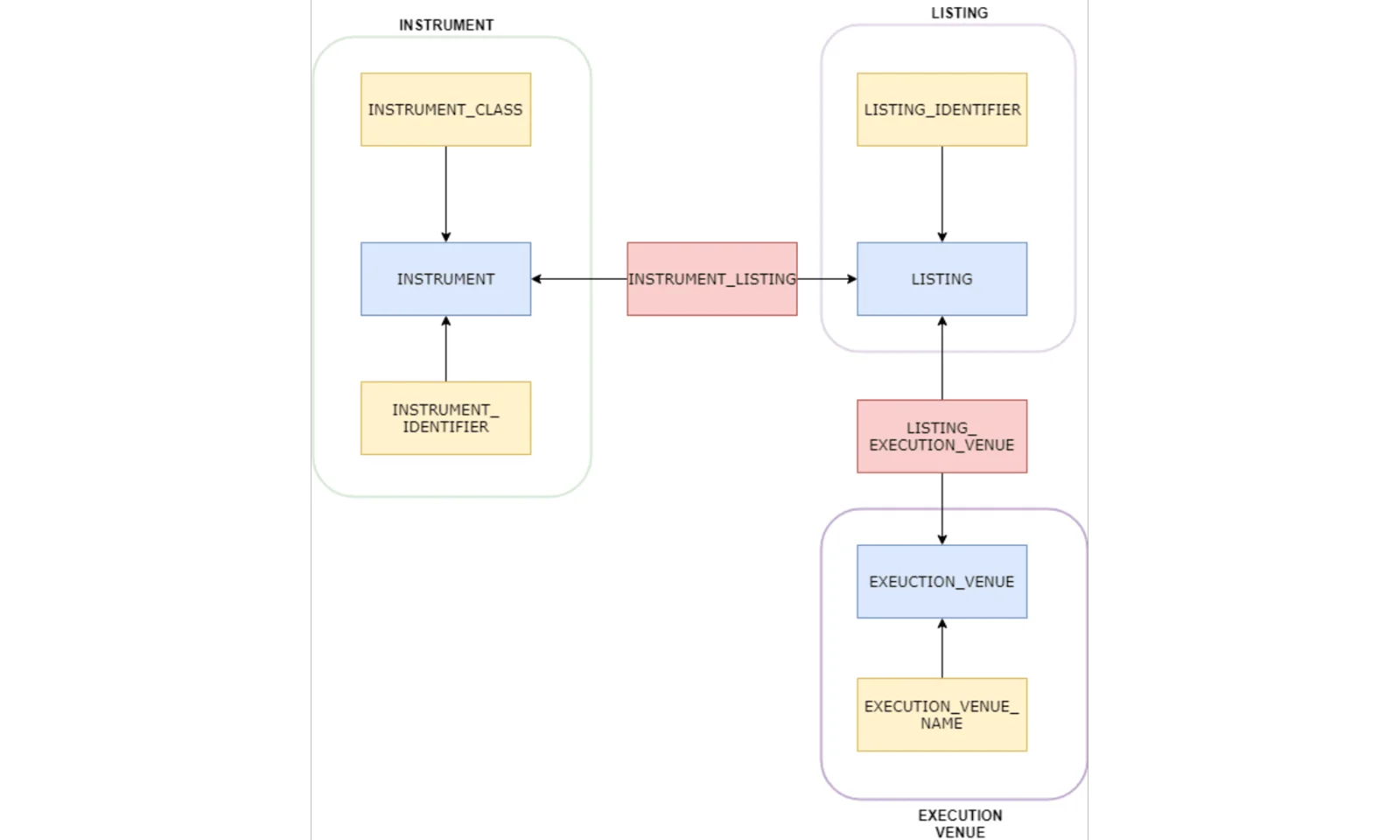

An example model and relationship are presented in the image below.

To put it simply, the main types of entities in DV 2.0 are:

- Hubs – store business keys of entities, e.g. IDs,

- Links – store the relationship between objects by connecting two or more Hubs,

- Satellites – carry additional attributes describing a given object (connected to the Hub) or relationship (connected to the Link).

These are only the basic types of objects used in DV. Moreover, each of them stores appropriate metadata. The objects mentioned above have their subtypes and concepts related to them, which will be explained in the next article.

Using Figure 2 as a representation of object types, let's focus on understanding an example model that already describes a real business case.

As you can see, we have several thematic areas linked together:

- Instrument – an area describing financial instruments,

- Execution Venue – an area describing the financial market,

- Listing – an area describing the Listing of a given instrument, i.e. the existence of a given instrument on the financial market (e.g. on the stock exchange).

This is a simplified model describing the world of financial instruments. Entity names seem to be descriptive, but for the sake of clarity I described them below:

- INSTRUMENT – contains the instrument business key – identifier,

- INSTRUMENT_IDENTIFIER – contains alternative instrument identifiers, e.g. ISIN, CUSIP,

- INSTRUMENT_CLASS – contains information about the class to which a given instrument belongs (e.g. REIT),

- INSTRMENT_LISTING – connects the instrument to the listing,

- LISTING – contains the business key of the listing,

- LISTING_IDENTIFIER – listing identifier (instrument at market level), e.g. RIC, SEDOL,

- LISTING_EXECUTION_VENUE – combines listing information with the market,

- EXECUTION_VENUE – contains the financial market business key,

- EXECUTION_VENUE_NAME – contains the descriptive name of the financial market.

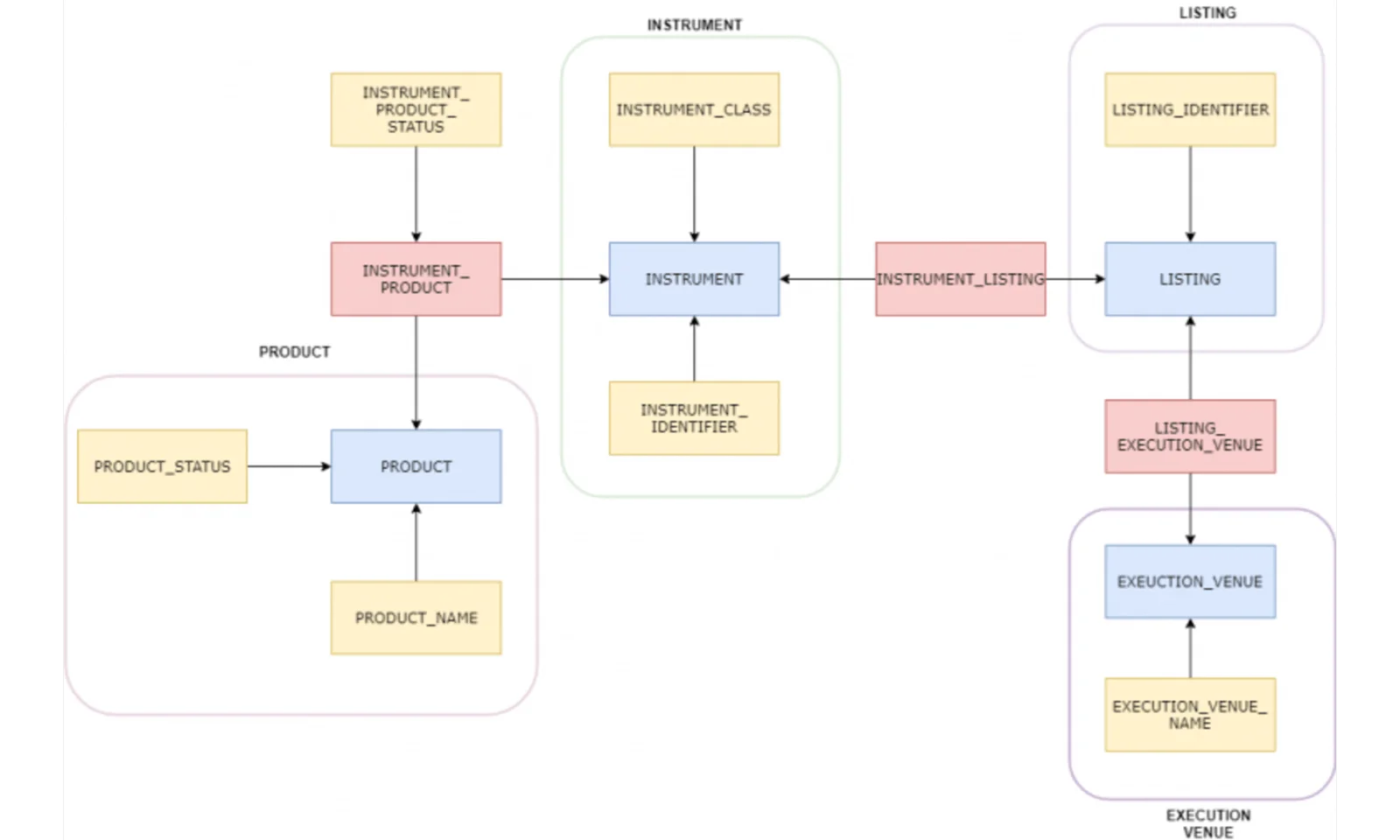

Let's imagine the following situation: we want to expand a given model with a new area containing information about financial products. We know that a given product is associated with an instrument. Do we need to change the existing model? Not necessarily - all we need to do is attach the new area to our model using a Link object (INSTRUMENT_PRODUCT).

As I mentioned above, Satellites entities can describe both Hubs and Links . PRODUCT_STATUS, which provides information about the status of a given product (a product with a given business key), is one thing, and INSTRUMENT_PRODUCT_STATUS, which tells us about the status of the product's relationship with the instrument (combination of two business keys), is another thing. It may happen that both the product and the instrument are active, but the instrument is no longer associated with the given product.

Kimball vs Inmon vs Data Vault

Let's look at one more aspect of the new model and the entire data warehouse implementation methodology: how does DV 2.0 fit into the solutions that have been existing for years?

Ralph Kimball and Bill Inmon proposed their solutions several decades ago and they constituted the core of data warehouses at that time. You can find plenty of reviews of these approaches and their comparisons in various sources. So where does Data Vault fit into this context ? To answer this question, we need to understand what we really want to compare - the models themselves or entire methodologies.

This is important because, as the creator of Data Vault himself, Dan Linstedt, says, Corporate Information Factory ( CIF ) Inmona and Kimball Architecutre BUS are architectural frameworks that tell us what we should have in the data warehouse, while Data Vault Methodology will tell us how to do it. implement it.

As you can see, there are many aspects that should be taken into account, but analysing the solutions very generally, one can be tempted to say that Data Vault is more similar to CIF proposed by Inmon. Here we are also dealing with a 3-layer architecture, and only Data/Information Marts are built using multidimensional structures. So what is the difference between these two approaches? According to Linstedt, the fundamental difference is at what point we apply business rules. In Inmon's approach, data that is loaded into the ODS area ( Operational Data Store ), which is the core of this architecture, is already cleaned and transformed. However, in DV 2.0 , we leave these operations at the very end of processing, loading data into the Business Data Vault or the Information Mart area .

It is also worth mentioning that CIF has evolved (taking into account today's needs) into DV 2.0 and this difference is disappearing. According to Linstedt, Bill Inmon himself stated:

“The Data Vault is the optimal choice for modeling the EDW in the DW 2.0 framework.”

In turn, if we wanted to compare the discussed solution with Kimball's approach, we would have to focus, among others, on: on a comparison of the proposed models: the model used in DV 2.0 and Star Schema. However, this is such a broad topic that it should be analysed separately.

Summary

Data Vault 2.0 certainly has many advantages, including: an exceptionally flexible structure that allows adapting new business requirements and adding additional areas to the warehouse relatively quickly and without requiring major changes to the existing structure. Additionally, the metadata that is kept with business data and the fact that data transformation using the so-called soft business rules take place at the end of the process, facilitate data auditing and enable meeting requirements such as traceability. Moreover, from a technical point of view, the flexibility of data storage structures in DV 2.0 allows the creation of generic data loading mechanisms and the automation of the entire process.

On the other hand, there seem to be few data warehouse management and reporting programs that fully support the DV model . This forces the addition of an additional layer that will transform Raw or Business Data Vault into a form understandable by the end user and/or application - which is of course not a bad practice, but in this approach we have no other option.

To sum up, the concept itself seems very attractive to me.

Thanks to its advantages, Data Vault 2.0 fits very well into the needs of Data Governance, which seem to be crucial in today's world - especially in the context of regulations that financial institutions must meet.

Taking into account the BCBS 239 regulation, which explicitly states the proper definition, collection and management of risk-related data, taking into account principles such as accuracy, integrity, completeness, timeliness and adaptability, DV 2.0 seems to be a solution that can help meet these requirements. Naturally, it is still only (and only) a tool, and it is people who decide whether the above principles or good practices are met - starting from high-level Data Management, up to the delivery of individual solutions.